MULTIYEAR PROGRAM

CONFERENCE

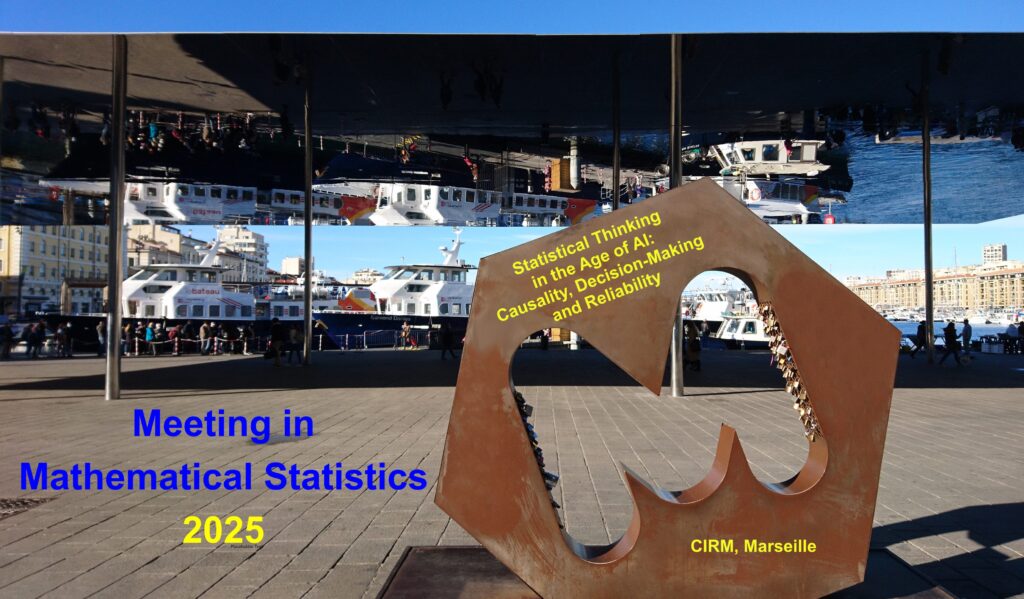

Meeting in Mathematical Statistics

Rencontres de Statistique Mathématique

Statistical thinking in the age of AI: decision-making and reliability

15 – 19 December 2025

Scientific Committee & Organizing Committee

Olga Klopp (ESSEC, CREST)

Mohamed Ndaoud (ESSEC)

Christophe Pouet (École Centrale de Marseille)

Alexander Rakhlin (MIT)

We plan to dedicate the 2023–2025 series of conferences to challenges and emerging topics in mathematical statistics driven by advances in artificial intelligence. Tremendous progress has been made in building powerful machine learning algorithms such as random forests, gradient boosting and neural networks. These models are exceptionally complex and difficult to interpret, but they offer enormous opportunities in many areas of application, ranging from science and public policy to business. These sophisticated algorithms are often called "black boxes" because they are very hard to analyze. The widespread use of such predictive algorithms raises critical questions of replicability, reliability, robustness and privacy protection. The proposed series of conferences is dedicated to new statistical methods built around these black-box algorithms that leverage their power while guaranteeing their replicability and reliability.

As with the first conference of the cycle, the objective of this final conference is to bring theoretical computer scientists and mathematical statisticians together at Luminy to exchange ideas on the careful quantification of decision-making algorithms and on causal inference. These topics are of central importance in modern data science, and we strongly believe that bringing the two communities together will advance the field and move us closer to solving key challenges such as causal inference in machine learning, selective inference, post hoc analysis, and bridging decision-making and estimation.

TUTORIALS

Unsupervised learning is a central challenge in artificial intelligence, lying at the intersection of statistics and machine learning. The goal is to uncover patterns in unlabelled data by designing learning algorithms that are both computationally efficient (that is, they run in polynomial time) and statistically effective (that is, they minimize a relevant error criterion).

Over the past decade, significant progress has been made in understanding statistical–computational trade-offs: for certain canonical "vanilla" problems, it is now widely believed that no algorithm can be both statistically optimal and computationally efficient. Somewhat surprisingly, however, many extensions of these widely accepted conjectures to slightly modified models have recently been proven false. These modified models introduce additional structure that can be exploited to bypass the presumed limitations.

In these talks, I will begin by presenting a vanilla problem for which a statistical–computational trade-off is strongly conjectured. I will then discuss a specific class of more complex unsupervised learning problems, namely ranking problems, in which extensions of the standard conjectures have been refuted, and I will try to explain why.

SPEAKERS

Randolf Altmeyer (Imperial College London) Bayesian nonparametrics for semi-linear stochastic PDEs

Denis Belomestny (University of Duisburg-Essen) Inverse Entropy-regularized RL

Victor-Emmanuel Brunel (ENSAE Paris) Constrained M-estimation with convex loss and convex constraints

Yuxin Chen (University of Pennsylvania) Transformers Meet In-Context Learning: A Universal Approximation Theory

Sinho Chewi (Yale University) Toward ballistic acceleration for log-concave sampling

Mara Daniels (MIT) Splat Regression Models

Vincent Divol (ENSAE Paris) Minimax spectral estimation of Laplace operators

Oliver Feng (University of Bath) Optimal estimation of monotone score functions

Jean-Baptiste Fermanian (Université de Montpellier) Class conditional conformal prediction for multiple inputs by p-value aggregation

Marc Hoffmann (Université Paris Dauphine-PSL) Rainfall, volatility and roughness: an intriguing story across scales

Gil Kur (ETH Zurich) On the Convex Gaussian Minimax Theorem and Minimum-Norm Interpolation

Cheng Mao (Georgia Institute of Technology) Optimal detection of planted matchings via the cluster expansion

Theodor Misiakiewicz (Yale University) Learning Multi-Index Models via Harmonic Analysis

Maxim Panov (Mohamed Bin Zayed University of Artificial Intelligence) Rectifying Conformity Scores for Better Conditional Coverage

Reese Pathak (UC Berkeley) On the Gaussian width and the metric projections of Gaussians: new characterizations and minimaxity result

Nikita Puchkin (HSE University) Stochastic optimal control approach to generative modelling and Schrödinger potential estimation

Maxim Raginsky (University of Illinois Urbana-Champaign) Separating Geometry from Statistics in the Analysis of Generalization

Henry William Joseph Reeve (Nanjing University) Adaptive partial monitoring in non-stationary environments

Markus Reiß (Humboldt University of Berlin) On an open problem in statistics for SPDEs

Philippe Rigollet (MIT) The mean-field dynamics of transformers

Rajen Shah (University of Cambridge) Hunt and test for assessing the fit of semiparametric regression models

Zong Shang (ENSAE Paris) Sharp convergence rates for spectral methods via the Feature Space Decomposition method

Runshi Tang (University of Wisconsin-Madison) Revisit CP Tensor Decomposition: Statistical Optimality and Fast Convergence

Mathias Trabs (Karlsruhe Institute of Technology) Nonparametric estimation for linear SPDEs on arbitrary bounded domains based on discrete observations

Hemant Tyagi (Nanyang Technological University Singapore) Joint learning of a network of linear dynamical systems via total variation penalization

Yuting Wei (University of Pennsylvania) Accelerating Convergence of Score-Based Diffusion Models, Provably

Konstantin Yakovlev (HSE University) Generalization error bound for denoising score matching under relaxed manifold assumption

Anderson Ye Zhang (University of Pennsylvania) Sharp statistical guarantees for spectral methods